In an era of ever-advancing technology, demand for efficient and capable language models keeps growing. PowerInfer-2 is a framework that brings fast large language model (LLM) inference to the device in the palm of your hand: your smartphone. Running models locally opens up new possibilities for on-the-go language processing. Join us as we delve into how PowerInfer-2 works and what it means for mobile language model inference.

Overview of PowerInfer-2 Technology

PowerInfer-2 is a framework for running large language models on smartphones. Rather than treating a model as one dense block of computation, it exploits the fact that only a subset of neurons is strongly activated for any given token, and it spreads work across the phone's heterogeneous processors. This lets complex language processing tasks run quickly on the device while aiming to preserve accuracy.

With PowerInfer-2, users can interact smoothly with language-based apps and services on their smartphones, opening new possibilities for productivity and creativity. Developers, in turn, can build applications that use large language models in real time, improving user experiences across a wide range of use cases.
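To make the idea of activation sparsity concrete: in the feed-forward layers of ReLU-based LLMs, only some neurons produce a non-zero output for a given token, so a system that can predict which neurons will fire can skip loading and computing the rest. PowerInfer-2 is built around this observation. The Python below is a minimal, illustrative sketch of the idea, not PowerInfer-2's implementation. It assumes a ReLU feed-forward layer stored as plain lists, and `oracle_active` is a hypothetical stand-in for the small learned predictor a real system would use (the oracle cheats by computing every pre-activation).

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row i holds the weights of neuron i


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def dense_ffn(x: Vector, w_up: Matrix, w_down: Matrix) -> Vector:
    """Reference layer: evaluates every neuron."""
    out = [0.0] * len(w_down[0])
    for up_row, down_row in zip(w_up, w_down):
        h = relu(dot(up_row, x))
        for j, w in enumerate(down_row):
            out[j] += h * w
    return out


def oracle_active(x: Vector, w_up: Matrix) -> List[int]:
    """Hypothetical predictor stand-in: returns neurons with positive pre-activation.
    A real system would use a much cheaper, approximate predictor instead."""
    return [i for i, row in enumerate(w_up) if dot(row, x) > 0.0]


def sparse_ffn(x: Vector, w_up: Matrix, w_down: Matrix, active: List[int]) -> Vector:
    """Evaluates only the neurons listed in `active`; all others are treated as zero."""
    out = [0.0] * len(w_down[0])
    for i in active:
        h = relu(dot(w_up[i], x))
        for j, w in enumerate(w_down[i]):
            out[j] += h * w
    return out


# Toy layer: 4 neurons, model width 2 (values chosen only for illustration).
x = [1.0, -2.0]
w_up = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]
w_down = [[2.0, 1.0], [1.0, 1.0], [3.0, 3.0], [5.0, 5.0]]

active = oracle_active(x, w_up)
print(active)                               # [0]
print(sparse_ffn(x, w_up, w_down, active))  # [2.0, 1.0]
print(dense_ffn(x, w_up, w_down))           # [2.0, 1.0]
```

Because `sparse_ffn` touches only the rows listed in `active`, its cost scales with the number of predicted-active neurons rather than the full layer width, and its result matches the dense layer as long as the predictor does not miss a neuron that actually fires.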

Features and Capabilities of PowerInfer-2 for Language Model Inference

PowerInfer-2 runs large language models directly on your smartphone. Because inference happens on the device, users can tap into state-of-the-art language models without a constant internet connection or reliance on cloud servers.

Its key features include:

- High Performance: Achieve blazing-fast inference speeds on-device without sacrificing accuracy.

- Low Latency: Reduce wait times and increase responsiveness for real-time applications.

- Offline Mode: Use PowerInfer-2 without an internet connection for enhanced privacy and convenience.

- Optimized Resource Usage: Minimize battery drain and maximize efficiency for prolonged usage.

| Feature | Description |
|---|---|
| High Performance | Achieve fast inference speeds without compromising accuracy. |
| Low Latency | Decrease wait times for real-time applications. |
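On a phone, much of the latency can come from reading weights out of flash storage when a model does not fit in RAM. PowerInfer-2's authors describe overlapping these storage reads with computation so the processor is not left idle while data arrives. The timing model below is a hypothetical sketch, not PowerInfer-2 code: it compares a load-then-compute loop with a two-stage pipeline, and `sequential_ms`, `pipelined_ms`, and the chunk timings are illustrative assumptions.

```python
from typing import List


def sequential_ms(io_ms: List[int], compute_ms: List[int]) -> int:
    """Load a chunk, compute on it, then move to the next chunk (no overlap)."""
    return sum(io_ms) + sum(compute_ms)


def pipelined_ms(io_ms: List[int], compute_ms: List[int]) -> int:
    """Two-stage pipeline: reads are issued back to back, and each chunk is
    computed once its data has arrived and the previous chunk is finished.
    Assumes unlimited buffer space, so I/O never waits for compute."""
    if len(io_ms) != len(compute_ms):
        raise ValueError("need one I/O time and one compute time per chunk")
    io_done = 0
    compute_done = 0
    for io, comp in zip(io_ms, compute_ms):
        io_done += io  # this chunk's data is ready at io_done
        compute_done = max(compute_done, io_done) + comp
    return compute_done


# Hypothetical timings for three weight chunks (illustrative numbers, not measurements).
io = [4, 4, 4]
compute = [3, 3, 3]
print(sequential_ms(io, compute))  # 21
print(pipelined_ms(io, compute))   # 15
```

With overlap, the total approaches the time of the slower stage (here 12 ms of I/O) plus one chunk of the other stage, instead of the sum of both.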

Comparison with Other Inference Systems and Recommendations for Implementation

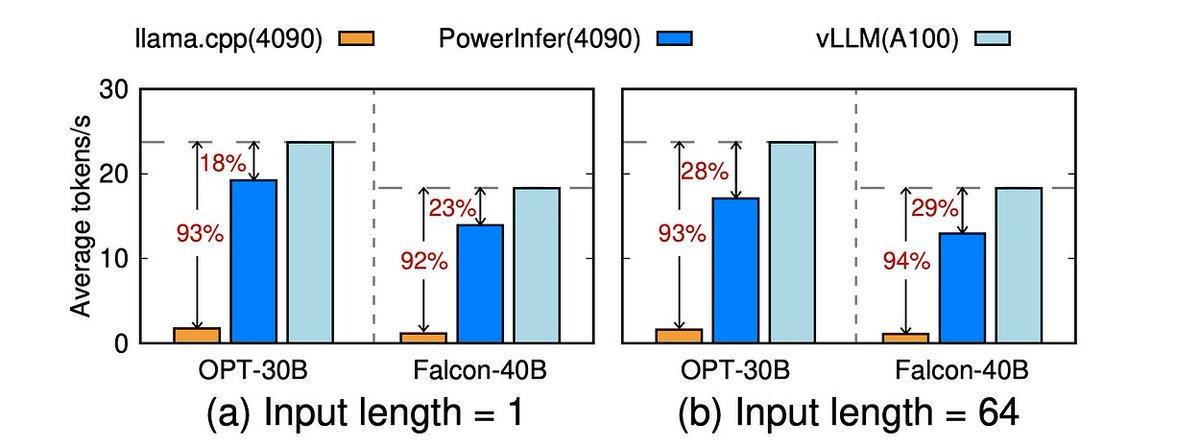

Compared with existing inference systems, PowerInfer-2 stands out as an efficient option for running large language models on smartphones. In its authors' evaluation, the framework processes requests substantially faster than conventional on-device inference systems while maintaining model quality. This makes it a strong choice for applications that need quick responses and resource-efficient operation.

When deploying PowerInfer-2 on smartphones, prioritize memory usage and CPU utilization, since these largely determine how much of the potential speedup is realized. Compatibility with a range of smartphone hardware architectures and operating systems is also crucial for widespread adoption. By following these guidelines, developers can use PowerInfer-2 to speed up language processing tasks on mobile devices.
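One concrete way to act on the memory-usage recommendation is to keep frequently used neuron weights in RAM and fetch the rest from storage on demand. PowerInfer-2's authors describe a neuron cache for this purpose; the sketch below uses a plain least-recently-used (LRU) policy as a stand-in, and `NeuronCache`, `load_from_flash`, and `fake_flash_read` are hypothetical names. PowerInfer-2's actual caching policy may differ.

```python
from collections import OrderedDict
from typing import Callable, List


class NeuronCache:
    """Keeps up to `capacity` neurons' weights in RAM, evicting the least
    recently used one on overflow. Misses are served by `load_from_flash`."""

    def __init__(self, capacity: int, load_from_flash: Callable[[int], List[float]]):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._load = load_from_flash
        self._entries: OrderedDict = OrderedDict()  # neuron id -> weights, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, neuron_id: int) -> List[float]:
        if neuron_id in self._entries:
            self._entries.move_to_end(neuron_id)  # mark as most recently used
            self.hits += 1
            return self._entries[neuron_id]
        self.misses += 1
        weights = self._load(neuron_id)  # slow path: read from storage
        self._entries[neuron_id] = weights
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return weights


# Hypothetical loader that returns placeholder weights and records storage reads.
reads: List[int] = []


def fake_flash_read(neuron_id: int) -> List[float]:
    reads.append(neuron_id)
    return [float(neuron_id)] * 4


cache = NeuronCache(capacity=2, load_from_flash=fake_flash_read)
for nid in [1, 2, 1, 3, 2]:
    cache.get(nid)
print(cache.hits, cache.misses)  # 1 4
print(reads)                     # [1, 2, 3, 2]
```

LRU is a reasonable default when the set of active neurons shifts gradually from token to token; a variant could also pin neurons known to be frequently active so they are never evicted.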

Potential Impact of PowerInfer-2 on Smartphone Applications

The potential impact of PowerInfer-2 on smartphone applications is substantial. By making large language model inference fast enough to run on the phone itself, it enables more efficient processing of natural language tasks. This can noticeably improve the experience of apps that rely on language processing, such as virtual assistants, chatbots, and translation services.

One key advantage of PowerInfer-2 is that it performs complex language tasks directly on the device, without relying on cloud-based servers. Avoiding a network round trip shortens response times and makes smartphone applications more responsive. Greater efficiency can also reduce power consumption and help extend battery life. Overall, this technology has the potential to change how smartphone applications use large language models to improve user experiences.

Conclusion

PowerInfer-2 is changing the way large language models are deployed on smartphones. Its fast inference paves the way for more efficient and capable mobile applications. As the technology matures, PowerInfer-2 shows how much more on-device computing can do. So next time you're using your smartphone, remember the computing power that lies in the palm of your hand.