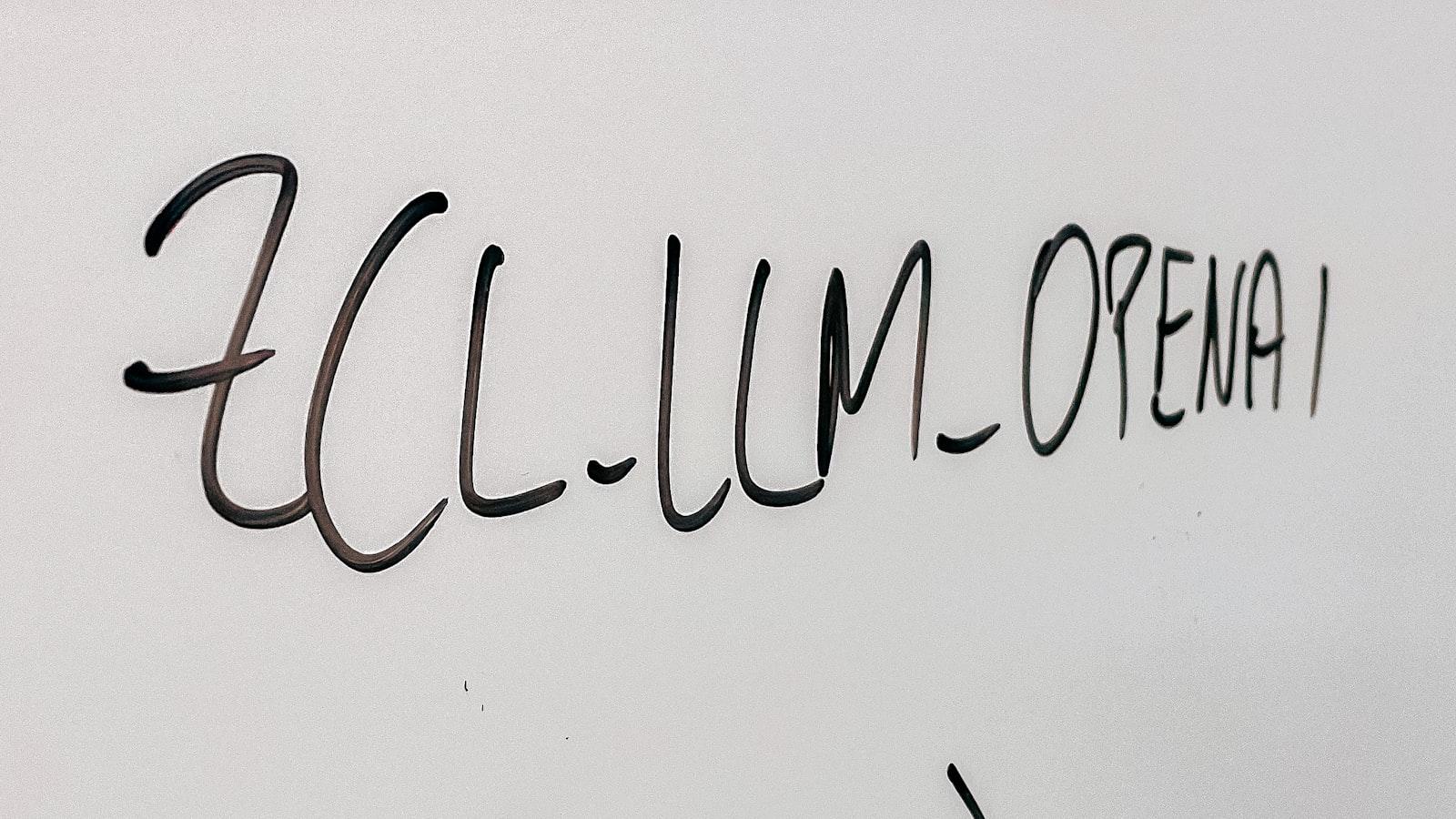

Large language models (LLMs) have surged in popularity for their ability to generate human-like text, but running them is often limited by the memory available on a device. “LLM in a Flash” is an approach to LLM inference with limited memory, aimed at making AI applications more efficient and scalable. This article looks at the approach and what it could mean for the future of natural language processing.

Fast and efficient large language model inference

Efficient large language model inference is crucial for a wide range of applications, from natural language processing to machine translation. In order to achieve fast and effective results, it is essential to optimize memory usage while maintaining high performance. With limited memory resources, LLM in a Flash offers a solution that combines speed and efficiency for large language model inference.

By utilizing advanced memory management techniques, **LLM in a Flash** streamlines the process of running complex language models, allowing for quick and accurate results even on devices with limited memory capacity. This innovative approach enables users to harness the power of large language models without sacrificing performance, making it an ideal solution for applications that require fast and efficient inference capabilities.

Optimizing memory usage for improved performance

When it comes to optimizing memory usage, one of the key challenges is running large language models efficiently with limited memory resources. In natural language processing this problem is especially prevalent, because language models continue to grow rapidly in size. To address it, LLM in a Flash has emerged as a way to enable efficient large language model inference with limited memory.

LLM in a Flash relies on memory management techniques that make the most of available resources while minimizing the impact on performance. By prioritizing memory for the most critical parts of the computation and applying on-the-fly optimization strategies, it keeps large language models usable even in memory-constrained environments. With this approach, organizations can get more out of their language models without compromising performance or scalability.

Strategies for maximizing LLM efficiency and effectiveness

When it comes to maximizing LLM efficiency and effectiveness, it’s crucial to optimize the inference process while working within the constraints of limited memory. One effective strategy is to prioritize data streaming and caching techniques to reduce the memory footprint and speed up computation. By efficiently managing memory usage, you can enhance the performance of large language models without compromising accuracy.
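
The caching idea above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual LLM in a Flash implementation: `WeightCache`, `fake_load`, and the capacity of two layers are hypothetical names and values chosen for the example, and the loader only stands in for a read from slower storage.

```python
from collections import OrderedDict


class WeightCache:
    """Keeps at most `capacity` layers' weights in memory (LRU eviction)."""

    def __init__(self, load_fn, capacity):
        self.load_fn = load_fn    # hypothetical loader: layer index -> weights
        self.capacity = capacity
        self._cache = OrderedDict()
        self.loads = 0            # number of reads from slow storage

    def get(self, layer):
        if layer in self._cache:
            self._cache.move_to_end(layer)   # mark as most recently used
            return self._cache[layer]
        weights = self.load_fn(layer)        # cache miss: stream from storage
        self.loads += 1
        self._cache[layer] = weights
        if len(self._cache) > self.capacity:
            self._cache.popitem(last=False)  # evict the least recently used
        return weights


def fake_load(layer):
    # Stand-in for reading one layer's weights from flash or disk.
    return [float(layer)] * 4


cache = WeightCache(fake_load, capacity=2)
for layer in [0, 1, 0, 2, 1]:
    cache.get(layer)
print(cache.loads)  # prints 4: only the second request for layer 0 is a hit
```

The memory footprint is bounded by `capacity`, while the number of slow reads depends on the access pattern. Least-recently-used eviction is only one possible policy; a strictly sequential pass over more layers than the cache can hold would miss on every access, so the policy should match how the model actually touches its weights.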

Another key strategy is to use model parallelism to distribute the workload across multiple processing units. Each unit holds and computes only part of the model, so the work runs in parallel and inference finishes sooner. Additionally, tuning inference settings such as batch size and sequence length can further improve the efficiency of LLM inference. By implementing these strategies, you can get strong performance from your large language models while operating within memory constraints.
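
To make the model parallelism idea concrete, here is a small sketch in plain Python. It assumes the simplest case, a single linear layer whose output rows are split across two workers; `matvec`, `shard_rows`, and the toy matrix are hypothetical and chosen only for illustration. In a real system each shard would live on its own device and the partial results would be gathered over an interconnect.

```python
def matvec(rows, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in rows]


def shard_rows(weight, num_shards):
    """Split the output rows of a weight matrix into contiguous shards."""
    size = (len(weight) + num_shards - 1) // num_shards  # ceiling division
    return [weight[i:i + size] for i in range(0, len(weight), size)]


weight = [[1, 0], [0, 1], [2, 2], [1, -1]]  # toy 4x2 weight matrix
x = [3, 4]

shards = shard_rows(weight, 2)              # each worker holds half the rows
partials = [matvec(s, x) for s in shards]   # would run in parallel on devices
y = [v for part in partials for v in part]  # gather the partial outputs

print(y)  # prints [3, 4, 14, -1], identical to matvec(weight, x)
```

Each worker stores only its own shard, so per-worker weight memory falls roughly in proportion to the number of shards, at the cost of a gather step to reassemble the output.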

Key Takeaways

In conclusion, “LLM in a Flash” targets efficient large language model inference when memory is limited. By managing memory carefully, the approach aims to deliver strong performance in a compact, resource-efficient way, and it offers a glimpse of how capable language models might run on memory-constrained devices in the future.