Large language models are best known for processing text, but they can also be applied to numerical prediction. This article looks at language models as regressors: how they can map inputs, whether free text or structured data, to numbers, and where that capability may be useful.

Discovering the Versatility of Large Language Models

Large language models are more versatile than text generation and question answering suggest. They can also act as regressors: given structured data written out as text, for example a record's fields listed as name-value pairs, a model can learn to predict a numerical value. That makes them a candidate tool for data analysis and prediction tasks.
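A minimal sketch of what "inputting structured data into the model" could look like in practice. It only builds a few-shot prompt and parses a number out of a reply; no model is called. The helper names (`serialize_row`, `build_prompt`, `parse_prediction`), the `->` prompt layout, and the housing records are all assumptions made for illustration, not a format any particular model requires.

```python
import re


def serialize_row(row):
    """Render one record as 'key: value' pairs in a stable (sorted) order."""
    return ", ".join(f"{key}: {row[key]}" for key in sorted(row))


def build_prompt(examples, query, target_name):
    """Few-shot prompt: solved examples first, then the row to predict."""
    lines = [
        f"{serialize_row(features)} -> {target_name}: {target}"
        for features, target in examples
    ]
    # The last line is left open so the model completes it with a number.
    lines.append(f"{serialize_row(query)} -> {target_name}:")
    return "\n".join(lines)


_NUMBER = re.compile(r"[-+]?\d*\.?\d+(?:[eE][-+]?\d+)?")


def parse_prediction(completion):
    """Return the first number in the reply as a float, or None if absent.

    Assumes commas are thousands separators and strips them first.
    """
    match = _NUMBER.search(completion.replace(",", ""))
    return float(match.group()) if match else None


# Hypothetical housing records, invented for this example.
examples = [
    ({"sqft": 850, "bedrooms": 2}, 210000),
    ({"sqft": 1400, "bedrooms": 3}, 340000),
]
query = {"sqft": 1100, "bedrooms": 2}
prompt = build_prompt(examples, query, "price")
```

The resulting `prompt` would be sent to whichever model you use, and `parse_prediction` applied to its reply. Replies that contain no number come back as `None`, so they can be retried or discarded rather than silently treated as zero.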

Candidate applications include forecasting stock prices, predicting customer behavior, and analyzing trends in data sets. With suitable training and fine-tuning, a language model can make use of context, such as free-text descriptions, that traditional regression models may not capture. Whether it actually outperforms them depends on the task, so it is worth comparing against a conventional baseline.

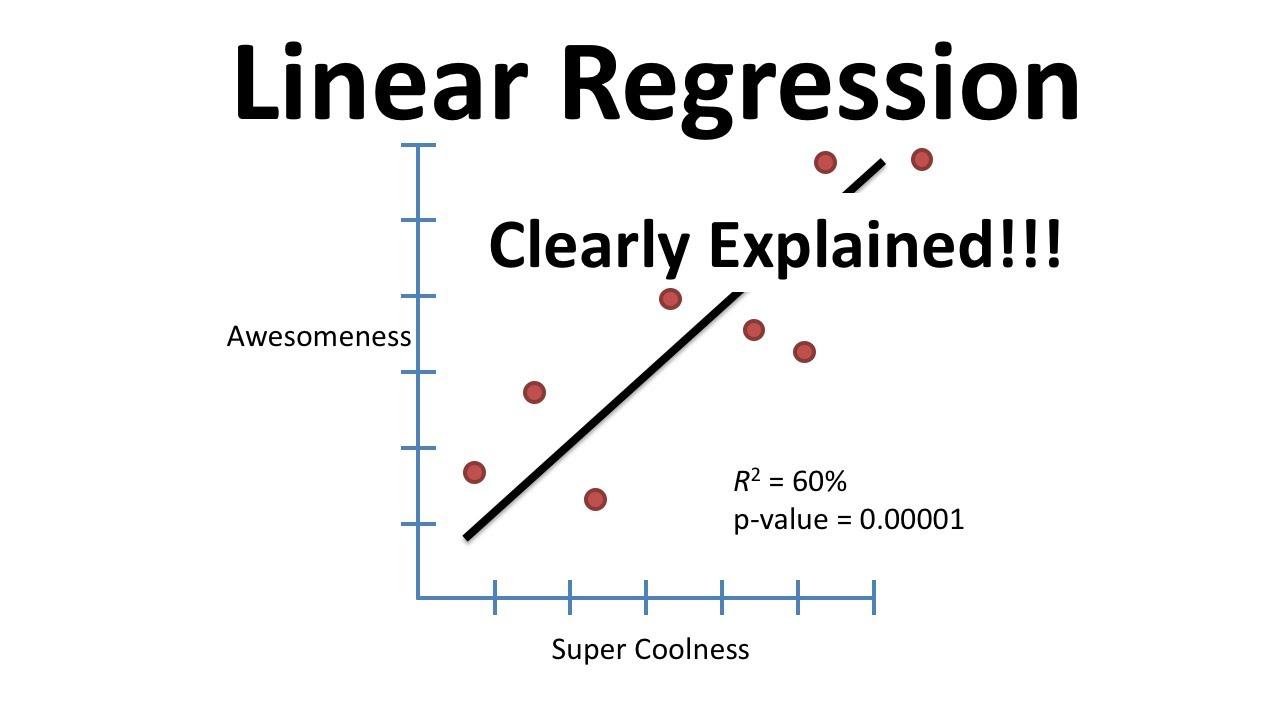

Unveiling the Regression Capabilities of Language Models

Most people associate language models with generating text from a prompt. Fewer realize that the same models can perform regression. Drawing on the patterns learned from their training data, large language models can predict numerical values from input variables.

Forecasting stock prices, predicting housing prices, and estimating customer lifetime value are all regression problems a language model can be applied to. Because these models take context and relationships within the data into account, they can produce useful predictions across a range of scenarios, and those predictions can in turn inform data-driven decisions.

Exploring the Potential Applications of Language Models in Regression Analysis

Language models have traditionally been used for natural language processing tasks such as text generation and sentiment analysis. They can also support regression analysis. One approach is to transform textual data into numerical features, typically embedding vectors, and use those features as predictors in a regression model.
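The text-to-features-to-regression pipeline can be sketched as follows. To keep the example self-contained, the `embed` function is a hashed bag-of-words vector that merely stands in for a real language-model embedding; in practice you would replace it with vectors from your model. The review texts, ratings, and hyperparameter defaults are invented for illustration.

```python
import zlib

DIM = 16  # feature dimension of the stand-in embedding


def embed(text, dim=DIM):
    """Stand-in for an LLM embedding: hashed bag-of-words counts."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 is deterministic across runs, unlike the built-in hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec


def predict(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, X, y):
    return sum((predict(weights, bias, x) - t) ** 2 for x, t in zip(X, y)) / len(y)


def fit_linear(X, y, lr=0.02, epochs=300, l2=1e-3):
    """Ridge-style linear regression trained by batch gradient descent."""
    dim, n = len(X[0]), len(y)
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, t in zip(X, y):
            err = predict(weights, bias, x) - t
            for j, xj in enumerate(x):
                grad_w[j] += 2 * err * xj / n
            grad_b += 2 * err / n
        weights = [w - lr * (g + 2 * l2 * w) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Hypothetical reviews with star ratings as the regression target.
texts = [
    "great product fast shipping",
    "terrible quality broke quickly",
    "okay value average build",
    "excellent sturdy great value",
]
ratings = [5.0, 1.0, 3.0, 5.0]

X = [embed(t) for t in texts]
weights, bias = fit_linear(X, ratings)
error_before = mse([0.0] * DIM, 0.0, X, ratings)
error_after = mse(weights, bias, X, ratings)
```

Separating the feature step from the regression step means the regressor can be any standard model, and the embedding can be swapped without touching the rest of the pipeline.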

A key advantage of using language models in regression analysis is their ability to capture complex relationships between variables. Having learned patterns and correlations from large amounts of text, they can carry that knowledge into regression tasks. They can also extract signal from unstructured text that would otherwise be hard to quantify, which offers a different approach to data analysis. As language models continue to improve, their use in regression analysis is likely to expand.

Recommendations for Leveraging Language Models as Regressors

One way to get more out of a large language model as a regressor is to fine-tune it on a dataset that matches your regression task. Tailoring the model to the domain of your data can improve the accuracy of its numerical predictions. Pre-trained language models such as BERT, GPT-3, or RoBERTa provide a strong starting point because they already encode natural language patterns and relationships.
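One common way to set up such fine-tuning, given here as an illustrative assumption rather than the only option, is to place a linear head on top of the model's representation and minimize mean squared error. The symbols are introduced for this sketch: $h_\theta(x_i)$ is the model's pooled representation of input $x_i$ with parameters $\theta$, $w$ and $b$ are the head's weight vector and bias, $y_i$ is the numerical target, and $N$ is the number of training examples.

$$
\hat{y}_i = w^\top h_\theta(x_i) + b,
\qquad
\mathcal{L}(\theta, w, b) = \frac{1}{N} \sum_{i=1}^{N} \left( \hat{y}_i - y_i \right)^2
$$

Fine-tuning updates $\theta$ together with $w$ and $b$. Freezing $\theta$ and training only the head is a cheaper variant that can serve as a first baseline.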

Another recommendation is to experiment with architectures and hyperparameters. This could involve adjusting the number of layers, hidden units, activation functions, or learning rate, and comparing configurations on held-out data to find the one best suited to your regression task. Techniques such as ensemble learning and transfer learning can improve results further.
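The search-then-ensemble idea can be illustrated with a deliberately tiny stand-in: a one-variable linear model trained by gradient descent, where the "hyperparameters" are the learning rate and the number of epochs. The data, the grid values, and the function names are assumptions for this sketch; with a real language model each `fit_line` call would be a full fine-tuning run.

```python
from itertools import product
from statistics import mean


def fit_line(xs, ys, lr, epochs):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def val_mse(model, xs, ys):
    """Mean squared error of a (w, b) model on held-out data."""
    w, b = model
    return mean((w * x + b - y) ** 2 for x, y in zip(xs, ys))


def ensemble_predict(models, x):
    """Average the predictions of several (w, b) models."""
    return mean(w * x + b for w, b in models)


# Toy data generated from y = 2x + 1, split into train and validation.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
val_x, val_y = [4.0, 5.0], [9.0, 11.0]

grid = {"lr": [0.001, 0.01, 0.05], "epochs": [50, 500]}

results = []
for lr, epochs in product(grid["lr"], grid["epochs"]):
    model = fit_line(train_x, train_y, lr, epochs)
    score = val_mse(model, val_x, val_y)
    results.append((score, {"lr": lr, "epochs": epochs}, model))

# Lowest validation error first.
results.sort(key=lambda r: r[0])
best_score, best_config, best_model = results[0]

# Simple ensemble: average the three best configurations.
top_models = [model for _, _, model in results[:3]]
prediction = ensemble_predict(top_models, 4.0)
```

Selecting on a validation split rather than the training data keeps the comparison honest, and averaging the top few configurations is one of the simplest forms of ensembling.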

Wrapping Up

As this article has shown, large language models can do more than generate text. Their ability to interpret language and turn it into numerical predictions opens up new options for data analysis and regression tasks. Much of this territory is still unexplored, and the way to find out what else these models can do is to keep experimenting.