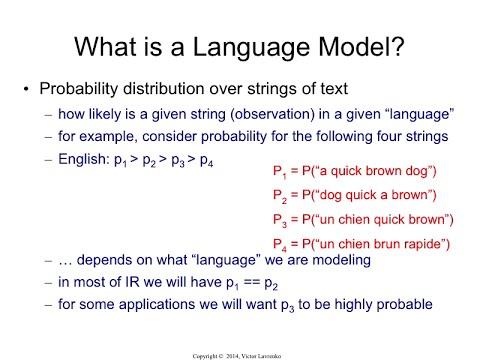

In a world where language models are becoming increasingly integral to our daily lives, ensuring their security has never been more crucial. The emergence of large language models presents a unique challenge in cybersecurity, as their complexity and vast knowledge base require innovative testing methods to identify potential vulnerabilities. In this article, we will explore the latest advancements in large language model security testing methods and delve into the importance of safeguarding these powerful AI systems from malicious attacks.

In-depth Analysis of Large Language Model Vulnerabilities

When conducting security testing on large language models, it is crucial to perform an in-depth analysis to uncover vulnerabilities that could potentially be exploited. One method that can be utilized is thorough testing of the model’s input and output mechanisms. By feeding the model with various types of inputs, including malicious inputs designed to trigger vulnerabilities, testers can observe how the model responds and identify any weaknesses.

Additionally, conducting performance testing on large language models can help uncover vulnerabilities related to the model’s ability to handle a high volume of requests. By simulating a heavy load on the model and monitoring its response times and resource utilization, testers can assess the model’s resilience to potential attacks. This can help identify weaknesses that could be exploited by attackers seeking to overwhelm the model and disrupt its functionality.

Testing for Weaknesses in Language Model Security

When it comes to , it is essential to have a comprehensive method in place. One effective approach is to conduct a series of rigorous tests aimed at identifying vulnerabilities that could potentially be exploited by malicious actors.

These tests should cover a wide range of scenarios and use cases to ensure that the language model is robust and secure. Additionally, it is crucial to employ a combination of automated tools and manual testing techniques to thoroughly evaluate the security of the model. By taking a proactive approach to security testing, organizations can better protect their data and mitigate the risks associated with using large language models.

Best Practices for Conducting Language Model Security Testing

When conducting language model security testing, there are several best practices to keep in mind to ensure the effectiveness and thoroughness of the process. One important aspect is to define clear objectives for the testing, including identifying potential vulnerabilities and assessing the overall security of the language model. This will help guide the testing process and focus on areas that are most critical for security.

Another key practice is to use a variety of testing techniques to assess the security of the language model from different angles. This can include static analysis, dynamic analysis, penetration testing, and fuzz testing. Each technique offers unique insights into the security of the model and helps uncover different types of vulnerabilities. By combining multiple techniques, you can get a more comprehensive view of the security posture of the language model.

Recommendations for Ensuring Robust Language Model Security

When it comes to ensuring the security of large language models, there are several key recommendations that can help to strengthen defenses against potential vulnerabilities. Firstly, **regular security audits** should be conducted to identify and address any potential weaknesses in the model’s architecture and implementation. This can help to prevent security breaches and ensure that sensitive data remains protected.

Additionally, **implementing robust access controls** and **encryption protocols** can help to safeguard data and prevent unauthorized access. It is also important to **monitor for any unusual activity** or **anomalies** that could indicate a security threat. By taking these steps, developers can help to ensure that their language model remains secure and robust in the face of potential cyber threats.

Concluding Remarks

In conclusion, the security testing method for large language models is an essential tool in ensuring the safety and integrity of these advanced AI systems. By continuously evaluating and improving their security measures, researchers can better protect against potential risks and vulnerabilities. As technology continues to evolve, it is imperative that we stay vigilant and proactive in our efforts to safeguard these powerful language models. Through comprehensive testing and analysis, we can pave the way for a more secure and trustworthy future in AI technology. Thank you for reading and stay tuned for more updates on this critical topic.